Storage-related performance has other considerations than file copy

Aka Windows Storage issues: Several flavors of the same Ice Cream

Aka: Examples where file copy illustrates how the test can be the problem.

There are a few cases which illustrate why file copy is not the best way to show that you have a performance issue. I will illustrate this point with four cases where that assumption proves wrong. This does not rule out hardware: there could be an underlying hardware issue. However, many people claim the disk is bad when the test merely shows how to exhaust the cache or exposes a design issue. These are not hardware issues!

Storage IO and File Copy

File copy is not a one-dimensional topic. The storage stack is more complex than it appears.

Figure 1. The Storage Stack.

I would like to present cases where assumptions about the cause of latency are wrong, specifically the claim that “slow file copy operations prove the disk is slow”. In every case, either the test violates the design of the storage architecture in some way, or an assumption is built into the assertion (slow file copy, slow disk, and so on).

In all cases, copy and paste is a single-threaded operation. That alone limits the speed of disk I/O, simply because of the copy method. This is a testing methodology error.

Second, most of these cases come down to the cache filling up, after which writes are flushed directly to disk. This is the least efficient way to copy, and performance is very low. Most solutions are designed to use cache to improve speed. The summary of this paper is that these tests are either 1) self-limiting or 2) simply overrunning the cache, so the performance issue is created by the test methodology.

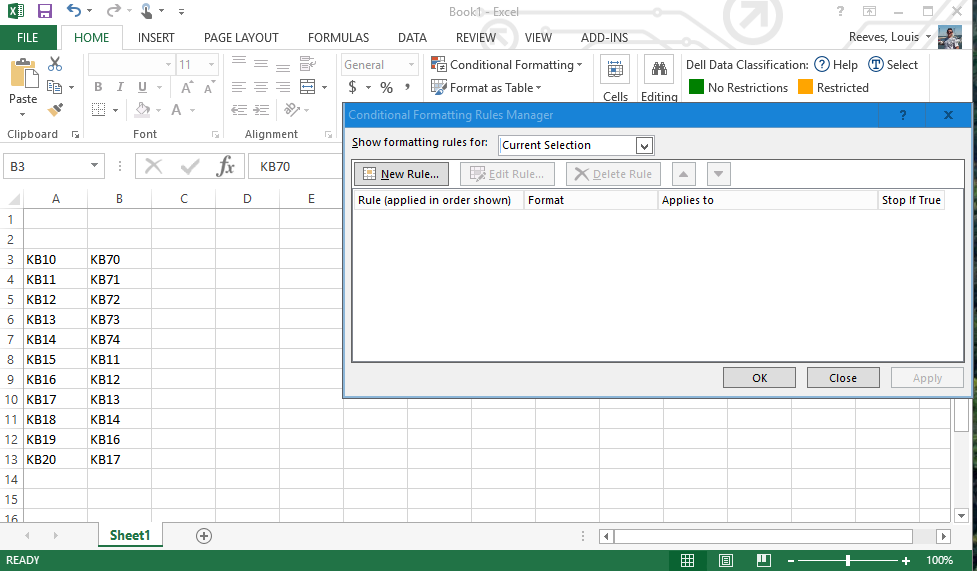

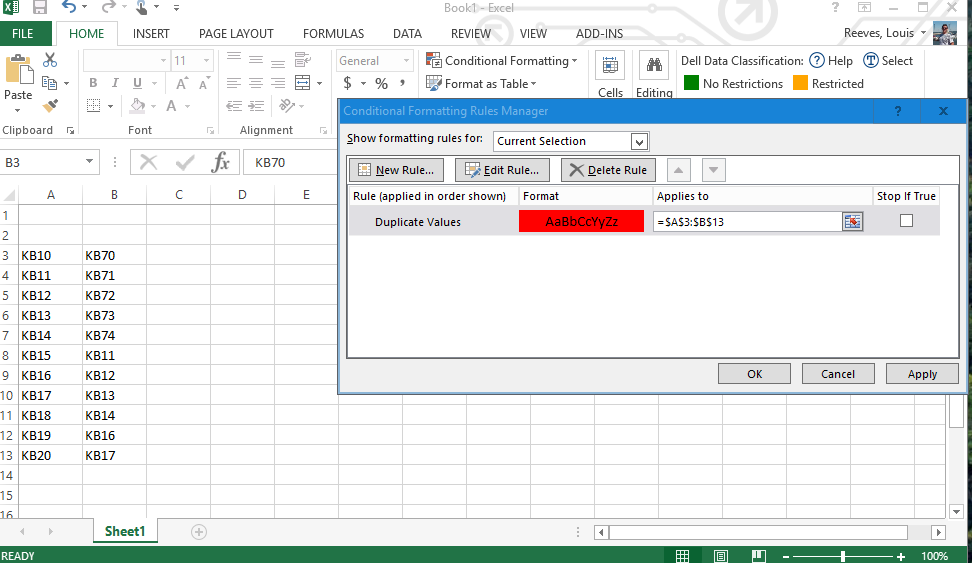

#1 (Server 20xx) The test simply maxes out the cache of the storage solution.

Problem: Slow file copy – Claim: Disk is bad

Solution: Windows 201X | Storage: Software RAID or SATA controller | Copy: Folder with one 500 GB ISO and 200 GB of small files

Result:

Figure 2. File copy going to zero.

What’s going on here? You can see the copy drop to nearly zero over and over. A professional support agent will tell you the cache is filling up. What does that mean? It means that copying 700 GB of data consumes all the cache resources, and the cache becomes the bottleneck.

Was the solution designed for this type of copying? To begin with, any large data copy should use a multithreaded copy (robocopy), but we must investigate the disk subsystem to see what is happening here.

What we really want to know is what happens every time the graph goes near zero. We need only two performance counters. Open Windows Performance Monitor and click the + sign:

- Disk Transfers/sec

- % Idle Time

Figure 3. Use Performance monitor counter.
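If you prefer the command line over the Performance Monitor UI, the same two counters can be sampled with the built-in `typeperf` tool. The sketch below only builds the command line. The `_Total` instance, the one-second interval, and the 60-sample count are assumptions chosen for illustration, and `typeperf_command` is a hypothetical helper name.

```python
# Counter paths for the two counters named above.
# Assumption: the _Total instance is used; pick a specific disk instance if needed.
COUNTERS = [
    r"\PhysicalDisk(_Total)\Disk Transfers/sec",
    r"\PhysicalDisk(_Total)\% Idle Time",
]


def typeperf_command(interval_s: int = 1, samples: int = 60) -> list[str]:
    """Build a typeperf command line that samples both counters.

    -si sets the sample interval in seconds, -sc the number of samples.
    """
    if interval_s < 1 or samples < 1:
        raise ValueError("interval and sample count must be positive")
    return ["typeperf", *COUNTERS, "-si", str(interval_s), "-sc", str(samples)]


print(" ".join(f'"{part}"' if " " in part else part for part in typeperf_command()))
```

On a Windows host, the returned list can be passed to `subprocess.run` while the copy is in progress, so the dips in the copy graph can be lined up against the counter values.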

What you will notice: when the graph goes to zero, the disk idle time goes to 0! Busy disks, OK. What else? Disk transfers per second is roughly IOPS. You can calculate your own IOPS, but top speed for a lower-end storage subsystem is about 60-80 IOPS. The graph below tells us all we need to know:
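One common rule of thumb for estimating the random-I/O ceiling of a single spinning disk is to divide one second by the average service time (seek time plus rotational latency). The sketch below applies that rule. The 7200 RPM speed and 9 ms seek time are assumed example values, not measurements from this case, and `estimate_hdd_iops` is a hypothetical helper name.

```python
def estimate_hdd_iops(rpm: float, avg_seek_ms: float) -> float:
    """Rough random-I/O ceiling for one spinning disk (rule of thumb only)."""
    # On average the platter has to turn half a revolution to reach the data.
    rotational_latency_ms = 0.5 * 60_000.0 / rpm
    service_time_ms = avg_seek_ms + rotational_latency_ms
    return 1000.0 / service_time_ms


# Assumed example drive: 7200 RPM, 9 ms average seek time.
iops = estimate_hdd_iops(rpm=7200, avg_seek_ms=9.0)
print(f"Estimated ceiling: {iops:.0f} IOPS")
```

With those assumed numbers the estimate lands inside the 60-80 IOPS range quoted above, which is why a sustained Disk Transfers/sec value near that level on such a disk means it is already doing all it can.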

Figure 4. Zero Idle time and high disk transfers/sec.

You see, in this case things are working as designed. The problem is that the customer is using file copy and has found the combination that exhausts the cache. Once that happens, writes get flushed straight to disk, and the slowdown persists for the rest of the copy.
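The cache exhaustion described here can be approximated with simple arithmetic: the cache fills at the difference between the incoming copy rate and the rate at which the disks drain it, and once it is full the copy runs at the drain rate. The sketch below is a deliberately simplified model (real systems see-saw rather than settle), and every number in it is an assumed example rather than a measurement.

```python
def seconds_until_cache_full(cache_gb, ingest_mb_s, drain_mb_s):
    """Time until a write cache fills, or None if the disks keep up."""
    net_fill_mb_s = ingest_mb_s - drain_mb_s
    if net_fill_mb_s <= 0:
        return None
    return cache_gb * 1024 / net_fill_mb_s  # treating 1 GB as 1024 MB


def total_copy_seconds(copy_gb, cache_gb, ingest_mb_s, drain_mb_s):
    """Estimated copy time: fast until the cache fills, drain-limited after."""
    copy_mb = copy_gb * 1024
    t_full = seconds_until_cache_full(cache_gb, ingest_mb_s, drain_mb_s)
    if t_full is None or ingest_mb_s * t_full >= copy_mb:
        return copy_mb / ingest_mb_s  # the cache never becomes the limit
    absorbed_mb = ingest_mb_s * t_full
    return t_full + (copy_mb - absorbed_mb) / drain_mb_s


# Assumed example: 700 GB copy, 4 GB cache, 1000 MB/s incoming, 100 MB/s to disk.
print(seconds_until_cache_full(4, 1000, 100))
print(total_copy_seconds(700, 4, 1000, 100))
```

In this assumed scenario the cache absorbs only the first few seconds of the copy, so almost the entire 700 GB moves at disk speed. That is the behavior the graph shows, and it is a property of the test rather than a sign of failing hardware.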

Proof. If you want proof of this, look no further than RAMMap.

Empty the standby list (see the screenshot below) and watch the copy take off like a rocket. If your hardware cache were clearing fast enough, this would happen automatically! This is your problem: not enough cache to sustain the copy.

Figure 5. Empty Standby List for copy to take off like a rocket.

To conclude, the needed change here is to 1) change the test or 2) get a faster disk subsystem with more cache. Hint: see DiskSpd at the end of this article.

#2 (S2D) The test circumvents the design of the storage subsystem (S2D)

Problem: Slow file copy – Claim: Disk is bad

Solution: S2D on Azure Stack HCI | Storage: H310 storage pool | Copy: Folder with one 500 GB ISO and 200 GB of small files

Result:

Figure 6. See-saw behavior when copying to or from the pool.

In this case, we have an insanely high number of possible IOPS, but that does not show in this example. What is happening is the same as in the case above. The difference is that S2D has entire drives dedicated to cache, and the problem is compounded by specific design parameters. S2D is designed around virtual machines, Hyper-V, and RDMA. You get insane transfer speeds between VMs and across nodes.

The perfect expression of this case is the customer who purchased S2D but does not use Hyper-V. If you use S2D as your storage without virtual machines, you’re missing the purpose. Without Hyper-V and VMs, you have no RDMA, and your leverage over the solution is lost. S2D is self-contained, so it is on a high-speed rail “within the solution”.

If you experiment with this file copy, you will find that you can copy from CSV to CSV just fine. This is what the solution was designed to do. The issue is that if you copy from your PC to the CSV, half of the connection is not optimized: your PC has a 1 Gb NIC, while S2D has more than one 25 Gb NIC. Even host to host is not thrilling.
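To put the NIC mismatch in numbers, here is a back-of-the-envelope calculation of the best-case wire time for the 700 GB test copy over each link. It assumes decimal gigabytes, a single 25 Gb link, and a perfectly efficient link unless an `efficiency` factor is supplied; `copy_seconds` is a hypothetical helper, and real copies will be slower than these floors.

```python
def copy_seconds(data_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Best-case transfer time in seconds for data_gb over a link of link_gbps.

    Assumes decimal units (1 GB = 8e9 bits) and ignores protocol overhead
    unless an efficiency factor between 0 and 1 is supplied.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_gb * 8e9
    return bits / (link_gbps * 1e9 * efficiency)


for gbps in (1, 25):
    minutes = copy_seconds(700, gbps) / 60
    print(f"{gbps:>2} Gb link: at least {minutes:.1f} minutes for 700 GB")
```

Even before any disk is involved, the 1 Gb client link puts a floor of more than an hour and a half on this copy, while the 25 Gb link would finish in a few minutes. A slow result from the PC therefore says more about the client connection than about the pool.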

In this case, the customer needs to move all the workloads to Hyper-V VMs and then test between VMs only. Then the use case is fulfilled, and the solution can be used as intended.

Again, testing should use DiskSpd with multiple threads. That section is at the end.

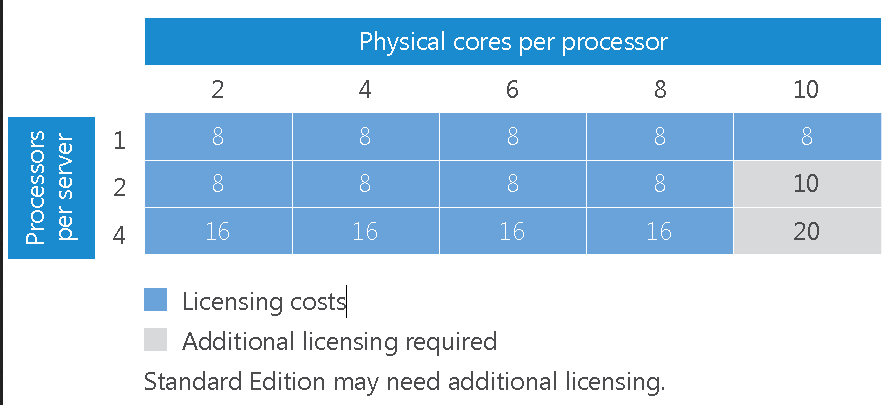

#3 (SQL VM) Comparing VM speed to a physical server (iSCSI)

Problem: Slow file copy – Claim: Disk is bad

Solution: SQL 19xx | Storage: Hyper-V on iSCSI | Copy: SQL query rebuilding or backup

Result:

The results can vary widely. The main takeaway is that performance may be perceived as slow to very slow compared to physical hardware. Let’s find out why.

This case is a bit of an outlier. Well, not really: it’s another flavor of the same ice cream. It’s not so much a file copy, but it still involves the claim that the storage is the cause. In place of file copy, we have a SQL database doing the writing. The situation is that SQL has been put in a VM, and the expectation is that it will be just as fast as on physical hardware.

Here is some reading about storage and queue management:

- Little’s Law (1)

- Storport Queue Management – Windows drivers | Microsoft Docs (2)

- best practice and deployment (3)

- Recommendation is to equally spread the data (4)

- database into separate files (5)

Since this is a more complex case, I am going to summarize what is going on here. The answer is in the documents above:

- A Hyper-V cluster with a CSV affords the Storport driver (see Figure 1) only a single queue.

- To make SQL faster, the data should be spread across multiple virtual disks. There is one queue per virtual disk (VD).

- Three virtual disks give three queues, so roughly three times the processing. See document 2.

- If you have multiple virtual disks but the whole load is on one of them, that is like having one VD. See document 5.

- There is a formula to calculate how much cache is needed. This must be built into the design. See document 1.
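Little's Law (document 1) relates the number of requests in flight to throughput and latency: outstanding I/Os = IOPS × latency. The sketch below rearranges it to show why one queue caps throughput and why adding virtual disks, each with its own queue, raises the ceiling. The queue depth of 32 and the 5 ms latency are assumed illustration values, not Storport defaults taken from the documents, and the linear scaling is a best case that assumes the load is spread evenly.

```python
def max_iops(outstanding_ios: int, latency_ms: float) -> float:
    """Little's Law rearranged: throughput = in-flight requests / latency."""
    return outstanding_ios / (latency_ms / 1000.0)


def required_outstanding(target_iops: float, latency_ms: float) -> float:
    """Little's Law: in-flight requests needed to sustain target_iops."""
    return target_iops * latency_ms / 1000.0


QUEUE_DEPTH = 32   # assumed per-queue limit, for illustration only
LATENCY_MS = 5.0   # assumed average I/O latency

for virtual_disks in (1, 3):
    ceiling = max_iops(QUEUE_DEPTH * virtual_disks, LATENCY_MS)
    print(f"{virtual_disks} virtual disk(s): about {ceiling:.0f} IOPS ceiling")
```

The same relationship works in the other direction: `required_outstanding` tells you how many requests must be in flight to reach a target IOPS figure at a given latency, which is the number the design has to accommodate.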

Hopefully you can see what is happening. SQL is running slow because of the Storport queue limitation. This is the same situation as case #1: the queue acts like a cache buffer that fills up.

The solution to this case is to break the SQL deployment up, just like you would on a physical server:

- Place tempdb on a standalone NVMe drive.

- Place the logs in a single CSV. (Document 3)

- Place the database in as many CSVs as necessary to meet speed requirements. (Document 5)

These steps will ensure SQL is as fast as possible.

Are we building a case which is easy to understand? If you want to know whether you really have a physical disk issue, you need to run a baseline test and find out what non-production, multithreaded reads and writes look like. That is covered in the baseline testing section below.

#4 (SQL slow queries, disk’s fault) Standardized SQL database

Problem: Slow SQL due to disk – Claim: Disk is bad

Solution: SQL 19xx | Storage: any | Copy: SQL query rebuilding or backup

For a SQL database, there are design and deployment elements that will take all available resources and make the disk look like it’s faltering.

The only way to really troubleshoot SQL is to get off your specific workload and onto a scientifically reproducible workload.

There is no case example here because it’s been a while since I have seen this. But it’s no different than the VM case, except that when SQL is rebuilding indexes or backing up, it may be pulling a lot of IOPS. We don’t know what that number should look like for every corporate database.

How to proceed?

I designed a “fill database creation” template that populates a million rows in a new database. I find this is a good baseline, and it should complete in less than 20 minutes on any fast SQL server.

The file and article are included with this document. It’s scientific and reproducible.
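The template itself is not reproduced here. To show the idea of a fixed, repeatable fill workload, the sketch below uses Python's built-in `sqlite3` module as a stand-in, which is an assumption for illustration and not the SQL Server template. The table name, row format, and `fill_baseline` helper are all hypothetical.

```python
import sqlite3
import time


def fill_baseline(rows: int, db_path: str = ":memory:"):
    """Insert a fixed, deterministic set of rows and time it.

    Returns (row_count, elapsed_seconds). With the default ":memory:" path no
    disk is touched; pass a new file path on the volume under test instead.
    """
    conn = sqlite3.connect(db_path)
    try:
        conn.execute(
            "CREATE TABLE baseline (id INTEGER PRIMARY KEY, payload TEXT NOT NULL)"
        )
        start = time.perf_counter()
        with conn:  # one transaction, committed when the block exits
            conn.executemany(
                "INSERT INTO baseline (id, payload) VALUES (?, ?)",
                ((i, f"row-{i:07d}") for i in range(rows)),
            )
        elapsed = time.perf_counter() - start
        count = conn.execute("SELECT COUNT(*) FROM baseline").fetchone()[0]
    finally:
        conn.close()
    return count, elapsed


count, seconds = fill_baseline(100_000)
print(f"Inserted {count} rows in {seconds:.2f} s")
```

Because the data is identical on every run, the elapsed time can be compared across machines, across VMs, and before and after a change, which is the point of a standardized workload.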

Apologies for not having the details, but it belongs on this list. This is a head-and-shoulders example of how file copy or index writes are not the whole picture when troubleshooting.

Baseline testing Ideas

Baseline is all about the scientific method. I won’t beat a dead horse too badly here. (No horses were harmed during the filming of this video.)

Below is an example where someone will call into support, after running this command, claiming the disk is too slow.

- diskspd -b8K -d30 -o4 -t8 -h -r -w25 -L -Z1G -c20G D:\iotest.dat > DiskSpeedResults.txt

What I am trying to drive home here is: don’t run some complex command where you can’t isolate the results. Run the simplest command, testing one aspect at a time.

Introduce a Baseline. Anything is better than nothing

The above diskspd command is complex and long. Come up with some simple tests and run more of them over time. Second, test your commands on a laptop or desktop with a specific RAM, storage, and processor profile. Once you have recorded all the results on the client machine, duplicate the test in the virtual machine. Make sure it’s the only virtual machine running, and that nothing is running on the host but this one VM, with specific resources.
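One way to keep tests simple is to hold everything constant and vary a single parameter per run. The sketch below builds DiskSpd command lines that differ only in thread count. It uses only switches described in Appendix B (`-c`, `-b`, `-d`, `-t`, `-o`, `-w`, `-Sh`, `-L`), while the default values and the helper names `diskspd_command` and `thread_sweep` are assumptions for illustration.

```python
def diskspd_command(target, *, file_size="1G", block="8K", duration=30,
                    threads=1, outstanding=1, write_pct=0):
    """Build one DiskSpd command line as an argument list.

    Caching is disabled (-Sh) and latency is measured (-L) in every run so
    that results stay comparable between runs.
    """
    return [
        "diskspd",
        f"-c{file_size}",
        f"-b{block}",
        f"-d{duration}",
        f"-t{threads}",
        f"-o{outstanding}",
        f"-w{write_pct}",
        "-Sh",
        "-L",
        target,
    ]


def thread_sweep(target, thread_counts=(1, 2, 4, 8)):
    """Same test every time, changing only the number of threads."""
    return [diskspd_command(target, threads=n) for n in thread_counts]


for cmd in thread_sweep(r"d:\iotest.dat"):
    print(" ".join(cmd))
```

Run the commands one after another, never in parallel, and record each result next to the hardware profile it was measured on.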

Below I am not giving you results. I am just giving you the commands, along with some instructions on how to use DiskSpd. I am also leaving you with articles that VMware and Microsoft Hyper-V use when asking for baseline testing. Notice how many little requirements they have?

If you read these articles, they force you to pin things to get specific results. This is because you are calling in asking for, or expecting, maximums. To get maximums, you must design your VM and pin it to the maximum performance tier!

There is a reason for this! We are all trying to be scientific.

Tests to establish a Baseline.

- .\diskspd -c100M -d20 c:\test1 d:\test2

- .\diskspd -c2G -b4K -F8 -r -o32 -W30 -d30 -Sh d:\testfile.dat

- .\diskspd -t1 -o1 -s8k -b8k -Sh -w100 -g80 c:\test1.dat d:\test2.dat

- .\diskspd.exe -c5G -d60 -r -w90 -t8 -o8 -b8K -h -L (no target is given here; DiskSpd needs a target file path at the end of the command)

- .\diskspd.exe -c10G -d10 -r -w0 -t8 -o8 -b8K -h -L d:\testfile.dat

- .\scriptname.ps1

- Same as above- second location

- .\Diskspd -b8k -d30 -Sh -o8 -t8 -w20 -c2G d:\iotest.dat

With this battery of tests, you will get a good feel for your performance. Once the baseline is in place, put your workloads in production. Now you can compare the baseline to events like Windows Update, or to when you add applications.

The truth is that performance issues are 80% more likely to be due to changes in the system than to hardware. When hardware is going bad, you generally know ahead of time: you get an alert!

Robocopy if nothing else

If you can’t do the type of testing referenced in this document, you will need to use robocopy and at least run the copy with multiple threads. Depending on the situation, you might have to disable cache or pin something to a hot tier, but you must structure the test so you get what you are after. A random file copy may not capture the facts you need to make a decision.

There are some robocopy GUIs available:

Robocopy GUI Download (RoboCopyGUI.exe) (informer.com)

https://sourceforge.net/projects/robomirror/

https://sourceforge.net/projects/eazycopy-a-robocopy-gui/

Otherwise, you need to know the switches. This document is long already, and they are a Google search away. I use the GUI.
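For reference, the two switches that matter most for this kind of test are `/E` (copy subdirectories, including empty ones) and `/MT:n` (multithreaded copy with n threads, from 1 to 128). The sketch below builds such a command line. The paths and the thread count of 16 are placeholder assumptions, and `robocopy_command` is a hypothetical helper.

```python
def robocopy_command(source: str, destination: str, threads: int = 16) -> list[str]:
    """Build a multithreaded robocopy command line as an argument list."""
    if not 1 <= threads <= 128:
        raise ValueError("robocopy /MT accepts 1 to 128 threads")
    return ["robocopy", source, destination, "/E", f"/MT:{threads}"]


# Placeholder paths for illustration only.
print(" ".join(robocopy_command(r"D:\TestData", r"E:\TestData")))
```

If you launch this from a script, keep in mind that robocopy uses its exit code to report what was copied: values below 8 do not indicate failure, so only treat 8 and above as errors.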

A few other Details

Standardized file copy

If you’re doing case #1 above, you may find the file type affects that case. Here is a way to manually pre-create the files if desired:

- fsutil file createnew d:\iotest_20MB.dat 20000000

- fsutil file createnew d:\iotest_2GB.dat 2000000000

- fsutil file createnew d:\iotest_20GB.dat 20000000000

Here are the best articles on storage and I/O online right now. I was surprised that so many storage performance needs are covered in one place.

VM Performance

To see your actual VM numbers, try using this tool. Run this tool on the host while using DiskSpd on your VM.

Do not run more than one instance of DiskSpd at once! This will invalidate your tests!

Other tools

JPerf and DiskSpd

Conclusion

I want to thank you for reading my article. It turns out I had a lot more to say, but the rest is really reference material. Below I mostly address tools. Keep this article for those references alone! But it’s right to conclude here, so you don’t think the rest contains any additional cases.

What I have presented are examples where file copy was not going to be a good predictor of storage issues. If you really want to know whether your storage is the issue, use DiskSpd and do not run it in production. It’s just as likely that the other processes running on the server are the problem as the storage itself.

The takeaway is to use simple tools to collect the data, and to collect isolated, scientifically significant samples. If you do that, you will be able to tell a bad disk from a bad configuration!

Standardized SQL database

For a SQL database, support cannot just accept that storage is slow when we don’t know what the SQL database is doing. There are design and deployment elements that will take all available resources and make the disk look like it’s faltering.

The only way to really troubleshoot SQL is to get off your specific workload and onto a scientifically reproducible workload.

I designed a fill database creation template that populates a million rows in a new database. I find this is a good baseline, and it should complete in less than 20 minutes on any fast SQL server.

The file and article are included with this document. It’s scientific and reproducible.

Appendix A. What all those DiskSpd letters and numbers mean

- -t2: This indicates the number of threads per target/test file. This number is often based on the number of CPU cores. In this case, two threads were used to stress all of the CPU cores.

- -o32: This indicates the number of outstanding I/O requests per target per thread. This is also known as the queue depth, and in this case, 32 were used to stress the CPU.

- -b4K: This indicates the block size in bytes, KiB, MiB, or GiB. In this case, 4K block size was used to simulate a random I/O test.

- -r4K: This indicates random I/O aligned to the specified size in bytes, KiB, MiB, GiB, or blocks (overrides the -s parameter). The common 4K size was used to align properly with the block size.

- -w0: This specifies the percentage of operations that are write requests (-w0 is equivalent to 100% read). In this case, 0% writes were used for the purpose of a simple test.

- -d120: This specifies the duration of the test, not including cool-down or warm-up. The default value is 10 seconds, but we recommend using at least 60 seconds for any serious workload. In this case, 120 seconds were used to minimize any outliers.

- -Suw: Disables software and hardware write caching (equivalent to -Sh).

- -D: Captures IOPS statistics, such as standard deviation, in intervals of milliseconds (per-thread, per-target).

- -L: Measures latency statistics.

- -c5g: Sets the sample file size used in the test. It can be set in bytes, KiB, MiB, GiB, or blocks. In this case, a 5 GB target file was used.

Appendix B – DiskSpd Xmas time.

Command line and parameters · microsoft/diskspd Wiki · GitHub

| Parameter | Description |

| -? | Displays usage information for DiskSpd. |

| -ag | Group affinity – affinitize threads in a round-robin manner across Processor Groups, starting at group 0. This is default. Use -n to disable affinity. |

| -ag#,#[,#,…] | Advanced CPU affinity – affinitize threads round-robin to the CPUs provided. The g# notation specifies Processor Groups for the following CPU core #s. Multiple Processor Groups may be specified and groups/cores may be repeated. If no group is specified, 0 is assumed. Additional groups/processors may be added, comma separated, or on separate parameters. Examples: -a0,1,2 and -ag0,0,1,2 are equivalent. -ag0,0,1,2,g1,0,1,2 specifies the first three cores in groups 0 and 1. -ag0,0,1,2 -ag1,0,1,2 is an equivalent way of specifying the same pattern with two -ag# arguments. |

| -b<size>[K|M|G] | Block size in bytes or KiB, MiB, or GiB (default = 64K). |

| -B<offset>[K|M|G|b] | Base target offset in bytes or KiB, MiB, GiB, or blocks from the beginning of the target (default offset = zero) |

| -c<size>[K|M|G|b] | Create files of the specified size. Size can be stated in bytes or KiBs, MiBs, GiBs, or blocks. |

| -C<seconds> | Cool down time in seconds – continued duration of the test load after measurements are complete (default = zero seconds). |

| -D<milliseconds> | Capture IOPs higher-order statistics in intervals of <milliseconds>. These are per-thread per-target: text output provides IOPs standard deviation. The XML output provides the full IOPs time series in addition (default = 1000ms or 1 second). |

| -d<seconds> | Duration of measurement period in seconds, not including cool-down or warm-up time (default = 10 seconds). |

| -f<size>[K|M|G|b] | Target size – use only the first <size> bytes or KiB, MiB, GiB or blocks of the specified targets, for example to test only the first sectors of a disk. |

| -f<rst> | Open file with one or more additional access hints specified to the operating system: r: the FILE_FLAG_RANDOM_ACCESS hint, s: the FILE_FLAG_SEQUENTIAL_SCAN hint and t: the FILE_ATTRIBUTE_TEMPORARY hint. Note that these hints are generally only applicable to cached I/O. |

| -F<count> | Total number of threads. Conflicts with -t, the option to set the number of threads per file. |

| -g<bytes per ms> | Throughput per-thread per-target is throttled to the given number of bytes per millisecond. This option is incompatible with completion routines. (See -x.) |

| -h | Deprecated but still honored; see -Sh. |

| -i<count> | Number of I/Os (burst size) to issue before pausing. Must be specified in combination with -j. |

| -j<milliseconds> | Pause in milliseconds before issuing a burst of I/Os. Must be specified in combination with -i. |

| -I<priority> | Set I/O priority to <priority>. Available values are: 1-very low, 2-low, 3-normal (default). |

| -l | Use large pages for I/O buffers. |

| -L | Measure latency statistics. Full per-thread per-target distributions are available using the XML result option. |

| -n | Disable default affinity. (See -ag.) |

| -N<vni> | Specifies the flush mode used with memory mapped I/O: v: uses the FlushViewOfFile API, n: uses the RtlFlushNonVolatileMemory API, i: uses RtlFlushNonVolatileMemory without waiting for the flush to drain. |

| -o<count> | Number of outstanding I/O requests per-target per-thread. (1 = synchronous I/O, unless more than one thread is specified by using -F.) (default = 2) |

| -O<count> | Total number of I/O requests per shared thread across all targets. (1 = synchronous I/O.) Must be specified with -F. |

| -p | Start asynchronous (overlapped) I/O operations with the same offset. Only applicable with two or more outstanding I/O requests per thread (-o2 or greater) |

| -P<count> | Print a progress dot after each specified <count> [default = 65536] of completed I/O operations. Counted separately by each thread. |

| -r<alignment>[K|M|G|b] | Random I/O aligned to the specified number of <alignment> bytes or KiB, MiB, GiB, or blocks. Overrides -s. |

| -R[text|xml] | Display test results in either text or XML format (default: text). |

| -s[i]<size>[K|M|G|b] | Sequential stride size, offset between subsequent I/O operations in bytes or KiB, MiB, GiB, or blocks. Ignored if -r is specified (default access = sequential, default stride = block size). By default each thread tracks its own sequential offset. If the optional interlocked (i) qualifier is used, a single interlocked offset is shared between all threads operating on a given target so that the threads cooperatively issue a single sequential pattern of access to the target. |

| -S[bhmruw] | This flag modifies the caching and write-through modes for the test target. Any non-conflicting combination of modifiers can be specified (-Sbu conflicts, -Shw specifies w twice) and are order independent (-Suw and -Swu are equivalent). By default, caching is on and write-through is not specified. |

| -S | No modifying flags specified: disable software caching. Deprecated but still honored; see -Su. This opens the target with the FILE_FLAG_NO_BUFFERING flag. This is included in -Sh. |

| -Sb | Enable software cache (default, explicitly stated). Can be combined with w. |

| -Sh | Disable both software caching and hardware write caching. This opens the target with the FILE_FLAG_NO_BUFFERING and FILE_FLAG_WRITE_THROUGH flags and is equivalent to -Suw. |

| -Sr | Disable local caching for remote file systems. This leaves the remote system’s cache enabled. Can be combined with w. |

| -Sm | Enable memory mapped I/O. If the -Smw option is specified then non-temporal memory copies will be used. |

| -Su | Disable software caching, for unbuffered I/O. This opens the target with the FILE_FLAG_NO_BUFFERING flag. This option is equivalent to -S with no modifiers. Can be combined with w. |

| -Sw | Enable write-through I/O. This opens the target with the FILE_FLAG_WRITE_THROUGH flag. This can be combined with either buffered (-Sw or -Sbw) or unbuffered I/O (-Suw). It is included in -Sh. Note: SATA HDDs will generally not honor write through intent on individual I/Os. Devices with persistent write caches – certain enterprise flash drives and most storage arrays – will complete write-through writes when the write is stable in cache. In both cases, -S/-Su and -Sh/-Suw will see equivalent behavior. |

| -t<count> | Number of threads per target. Conflicts with -F, which specifies the total number of threads. |

| -T<offset>[K|M|G|b] | Stride size between I/O operations performed on the same target by different threads in bytes or KiB, MiB, GiB, or blocks (default stride size = 0; starting offset = base file offset + (<thread number> * <offset>)). Useful only when number of threads per target > 1. |

| -v | Verbose mode |

| -w<percentage> | Percentage of write requests to issue (default = 0, 100% read). The following are equivalent and result in a 100% read-only workload: omitting -w, specifying -w with no percentage and -w0. CAUTION: A write test will destroy existing data without issuing a warning. |

| -W<seconds> | Warmup time – duration of the test before measurements start (default = 5 seconds). |

| -x | Use I/O completion routines instead of I/O completion ports for cases specifying more than one I/O per thread (see -o). Unless there is a specific reason to explore differences in the completion model, this should generally be left at the default. |

| -X<filepath> | Use an XML profile for configuring the workload. Cannot be used with any other parameters. The XML output <Profile> block can be used as a template. See the diskspd.xsd file for details. |

| -z[seed] | Set a random seed to the specified integer value. With no -z, seed=0. With plain -z, seed is based on system run time. |

| -Z | Zero the per-thread I/O buffers. Relevant for write tests. By default, the buffers are filled with a repeating pattern (0, 1, 2, …, 255, 0, 1, …). |

| -Zr | Initialize the write buffer with random content before every write. This option adds a run-time cost to the write performance. |

| -Z<size>[K|M|G|b] | Separate read and write buffers and initialize a per-target write source buffer sized to the specified number of bytes or KiB, MiB, GiB, or blocks. This write source buffer is initialized with random data and per-I/O write data is selected from it at 4-byte granularity. |

| -Z<size>[K|M|G|b],<file> | Specifies using a file as the source of data to fill the write source buffers. |

Table 1. Command line parameters

Event parameters

The parameters in Table 2 specify events that can be used to start, end, cancel, or send notifications for a DiskSpd test run.

| Parameter | Description |

| -ys<eventname> | Signals event <eventname> before starting the actual run (no warm up). Creates a notification event if does not exist. |

| -yf<eventname> | Signals event <eventname> after the test run completes (no cool-down). Creates a notification event if does not exist. |

| -yr<eventname> | Waits on event <eventname> before starting the test run (including warm up). Creates a notification event if does not exist. |

| -yp<eventname> | Stops the run when event <eventname> is set. CTRL+C is bound to this event. Creates a notification event if does not exist. |

| -ye<eventname> | Sets event <eventname> and quits. |

Table 2. Event Parameters

Event Tracing for Windows (ETW) parameters

Using the parameters in Table 3, you can have DiskSpd display data concerning events from an NT Kernel Logger trace session. Because event tracing (ETW) carries additional overhead, this is turned off by default.

| Parameter | Description |

| -e<q|c|s> | Use a high-performance timer (QPC), cycle count or system timer respectively (default = q, high-performance timer (QPC)). |

| -ep | Use paged memory for the NT Kernel Logger (default = non-paged memory). |

| -ePROCESS | Capture process start and end events. |

| -eTHREAD | Capture thread start and end events. |

| -eIMAGE_LOAD | Capture image load events. |

| -eDISK_IO | Capture physical disk I/O events. |

| -eMEMORY_PAGE_FAULTS | Capture all page fault events. |

| -eMEMORY_HARD_FAULTS | Capture hard fault events. |

| -eNETWORK | Capture TCP/IP, UDP/IP send and receive events. |

| -eREGISTRY | Capture registry events. |

Table 3. Event Tracing for Windows (ETW) parameters

Size conventions for DiskSpd parameters

Several DiskSpd options take sizes and offsets specified as bytes or as a multiple of kilobytes, megabytes, gigabytes, or blocks: [K|M|G|b].

Conventions used for referring to multiples of bytes are a common source of confusion. In networking and communication, these multiples are universally in powers of ten: e.g. a ‘GB’ is 10^9 (1 billion) bytes. By comparison, a ‘GB’ of RAM is universally understood to be 2^30 (1,073,741,824) bytes. Storage has historically been in a gray area where file sizes are spoken of in powers of two, but storage system manufacturers refer to total capacity in powers of ten. Since storage is always accessed over at least one (SAS, SATA) or more (PCI, Ethernet, InfiniBand) communication links, this adds complexity into the already challenging exercise of understanding end-to-end flows.

The iB notation is an international convention which unambiguously refers to power of two-based sizing for numbers of bytes, as distinct from powers of ten which continue to use KB/MB/GB notation.

- 1 KiB = 2^10 = 1,024 bytes

- 1 MiB = 1024 KiB = 2^20 = 1,048,576 bytes

- 1 GiB = 1024 MiB = 2^30 = 1,073,741,824 bytes

This notation will be used in this documentation. DiskSpd reports power of two-based quantities with the iB notation in text results. XML results are always reported in plain bytes.

To specify sizes to DiskSpd:

- bytes: use a plain number (65536)

- KiB: suffix a number with ‘K’ or ‘k’ (e.g. 64k)

- MiB: suffix a number with ‘M’ or ‘m’ (e.g. 1m)

- GiB: suffix a number with ‘G’ or ‘g’ (e.g. 10g)

- multiples of blocks: suffix a number with ‘b’ (e.g. 5b) – multiplying the block size specified with -b

Fractional sizes with decimal points such as 10.5 are not allowed.
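The size rules above can be captured in a few lines. The sketch below is an illustration of the conventions as described in this section, not DiskSpd's own parser. `parse_size` is a hypothetical helper, and the default block size of 65536 is taken from the documented 64K default for -b.

```python
import re

# Power-of-two multipliers for the K/M/G suffixes (case-insensitive).
_MULTIPLIERS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_size(text: str, block_size: int = 65536) -> int:
    """Convert a DiskSpd-style size such as '64k', '1m', '10g' or '5b' to bytes.

    A 'b' suffix means multiples of the block size (the -b value).
    Fractional values such as '10.5' are rejected.
    """
    match = re.fullmatch(r"(\d+)([KkMmGgb]?)", text)
    if match is None:
        raise ValueError(f"not a valid size: {text!r}")
    number, suffix = int(match.group(1)), match.group(2)
    if suffix == "b":
        return number * block_size
    return number * _MULTIPLIERS[suffix.lower()]


for example in ("65536", "64k", "1m", "10g"):
    print(example, "=", parse_size(example), "bytes")
print("5b with -b4K =", parse_size("5b", block_size=4096), "bytes")
```

Note that `64k` and a plain `65536` are the same value, which is a quick way to check that two command lines are really testing the same block size.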