I realize I have written several Hyper-V articles lately. They all come from the unique perspective of technical support. I now see that I have been trying to put together some material to help explain how the new 2016 clusters differ from the 2012 clusters, and how those differ from the 2008 clusters.

So I want to express three clear goals in this paper. First, I want to define a list of items you may want to read to make your cluster as supportable as possible. Second, I want to speak to the importance of your NIC hardware purchases as they relate to the past and current stance of Microsoft on network requirements for Hyper-V cluster setups. Finally, I summarize some of the command line settings you may look at if you don't have the optimal NIC setup for a cluster.

Disclaimers

- I agree with the commenter below that this is getting old now and may not be the best advice. However, I wanted to write this article to document that you are not trapped into using software teams. This was written just as software teaming was getting off the ground. We had a lot of customers who were not getting the performance they wanted. For example, an LBFO cluster is not a SET cluster. You're not going to get RDMA speeds if you have three 1GB NICs and that's your cluster. Be aware that a lot has changed from 2012 to 2016. Take the time to experiment if you have that luxury.

- Read on only to see what the past opinions were. There are threads of truth in stopping to ask whether hardware teaming is going to work. Be aware that you may have two choices, depending on what you are trying to do.

- Try both ways if you have any doubts.

- Disclaimer (agent): While there are items in this discussion that may not be good advice for your particular infrastructure or situation, I am writing this from the familiar perspective that admins and designers approach me from. Namely, this is an "I want it all, and I want it all to work now!" type of design.

- Translation (customer needs): Give me the settings that make the fullest use of my server, give me the most VMs with the most possible resources, and I want to Live Migrate them all day long. Furthermore, I want to be able to host a conference in an RDP session on any one of my cluster nodes and not have any problems.

- The bottom line is this was written for 2010-2015 clustering. In 2018 you have to know exactly what kind of cluster you're setting up, and don't even try it unless you have some 10GB NICs. This is the way things have evolved in 2018. The SET and LBFO clusters are what you likely want to set up. The SET cluster is the best choice, but you need good 10GB networks.

Facts and Common issues in today’s and yesterday’s Hyper-V CSV Clusters

With this disclaimer in mind, let us proceed. First things first: I am providing this list based on my 10 years with Hyper-V and clustering, along with the reading and video information I have come across:

- Never use the (Hyper-V) shared network adapter as a NIC in the host server.

- Never software team with the NDIS driver installed from your NIC vendor.

- Software teaming is fine for most workloads, unless you're having latency problems. If you are, try hardware teaming instead, and vice versa.

- Don't put SQL servers on your Hyper-V host.

- VM Queuing (VMQ) can be a problem. Try your workloads with and without VM Queuing and see which works best for your situation.

- TCP Offload is not supported for Server 2012 cluster teams. Check the other settings here.

- The preferred software team setting is switch-independent teaming with the Hyper-V Port load-balancing mode. This is where we are at today. Remember, these statements are available in the current documentation.

- If you use the Multiplexer Driver as the virtual machine NIC, do not turn around and share that NIC with the Hyper-V host. This is not pretty.

- Use Jumbo Frames, QoS, etc., where you're supposed to, according to the current guidelines.

- Piggybacking off of #8, today's clusters with Hyper-V are a balance of isolation and bandwidth networks. There is no hard and fast rule on how many network adapters you need.

- You cannot just say a node is too slow or too fast. When you first install the server, you need to perform clearly laid out baseline testing, where similar results can be obtained for your server in pristine condition, with no other workloads. The same is true for virtual machines.

- You cannot run a Hyper-V CSV cluster with all your NICs in the team. You need at least 3 networks in any version of Hyper-V. These are:

  - Cluster Only (cluster communication)

  - Cluster and Client (management, etc.)

  - No Cluster Communication (iSCSI)

- Run Cluster Validation. If your updates don't match across your cluster, you need to get all your nodes to match before the cluster will work properly.

- Clustering only recognizes one NIC per subnet when you add multiple NICs to a cluster.

- Backup applications and antivirus may have compatibility issues. Disable both and see if the issues disappear.

Network Considerations

- The binding order and DNS must match your current MS documentation. Do not miss this.

- Cluster Setup now adds rules to the firewall automatically. If you are using Symantec Endpoint, these firewall rules can serve as your port list to add to the Symantec firewall.

- You can now Sysprep with the Cluster role installed in Server 2012.

- NETFT is enabled at the physical NIC, where you find your IPv4 properties. Do not disable it.

- If you are setting up converged clusters, you now have to rely on cluster validation to tell you whether you have enough networks to effectively set up your cluster. Resolve any of the network issues that validation reports.

- CSV traffic includes metadata updates and Live Migration data, as well as failure recovery (i.e., no storage connectivity). You cannot break this traffic into isolated streams.

- CSV needs NTLM and SMB. Don't disable either.

- iSCSI teams now work with MPIO and Jumbo Frames. Jumbo Frames are needed for iSCSI.

- Using multiple NIC brands is now preferred.

- This series of articles covers topics I did not go into in depth. Topics include:

- Mapping the OSI model

- VLANS

- IP routing

- Link Aggregation and Teaming

- DNS

- Ports, Sockets and Applications

- Bindings

- Load Balancing, Algorithms
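Several of the checklist items above can be checked from PowerShell. The following is a minimal sketch, not a definitive procedure. The node names `HV-NODE1` and `HV-NODE2` and the adapter name `NIC1` are hypothetical placeholders, and it assumes the Failover Clustering and NetAdapter modules are available on the host.

```powershell
# Hypothetical node names; replace with your own.
$nodes = 'HV-NODE1', 'HV-NODE2'

# Run cluster validation against the nodes and review the report it produces.
Test-Cluster -Node $nodes

# Show the VMQ state of each adapter before testing with and without VM Queuing.
Get-NetAdapterVmq | Format-Table Name, Enabled

# Disable VMQ on one adapter for a comparison run ('NIC1' is a placeholder).
Disable-NetAdapterVmq -Name 'NIC1'

# Re-enable VMQ once the comparison run is finished.
Enable-NetAdapterVmq -Name 'NIC1'

# List existing LBFO teams with their teaming mode and load-balancing algorithm.
Get-NetLbfoTeam | Format-Table Name, TeamingMode, LoadBalancingAlgorithm

# Show the jumbo frame setting on adapters that expose the standard keyword.
Get-NetAdapterAdvancedProperty -RegistryKeyword '*JumboPacket' |
    Format-Table Name, DisplayName, DisplayValue
```

Run the VMQ comparison against the same baseline workload each time, otherwise the two results are not comparable.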

Cluster nodes are multi-homed systems. Network priority affects the DNS Client for outbound network connectivity. Network adapters used for client communication should be at the top of the binding order. Non-routed networks can be placed at a lower priority. In Windows Server 2012/2012 R2, the Cluster Network Driver (NETFT.SYS) adapter is automatically placed at the bottom of the binding order list.
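One way to look at the priority Windows assigns to each interface is the interface metric, which as far as I know is what newer Windows Server versions use in place of the old binding order dialog. This is a read-only sketch under that assumption; it changes nothing on the host.

```powershell
# List IPv4 interfaces ordered by metric; a lower metric means a higher priority.
Get-NetIPInterface -AddressFamily IPv4 |
    Sort-Object InterfaceMetric |
    Format-Table InterfaceAlias, InterfaceMetric, ConnectionState

# Show which adapters register their addresses in DNS.
# Non-routed networks (for example iSCSI) normally should not register.
Get-DnsClient | Format-Table InterfaceAlias, RegisterThisConnectionsAddress
```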

Network Evolution and common sense Network needs

This section addresses how we build clusters today. For example, I recently wrote a paper on using the old isolation rules for a simple 2016 cluster, based on the old method of deployment. This method is elegant and works well, with little maintenance needed.

For 2012 and forward, we have the new design, which is detailed in the TechNet article "Network Recommendations for a Hyper-V Cluster in Windows Server 2012". That article covers the modern setup, using a software team and scripted network isolation.

This paper interleaves these two philosophies; at least that was the intent. You are always using one or the other as a guiding principle, insofar as you have the technical reasoning to do so. What I mean is, if you have 10GB NICs, you may fully move to the 2012 method. If you have something like three 1GB NICs, you are leaning on the 2008 article to explain to the customer why Live Migration would not work properly.

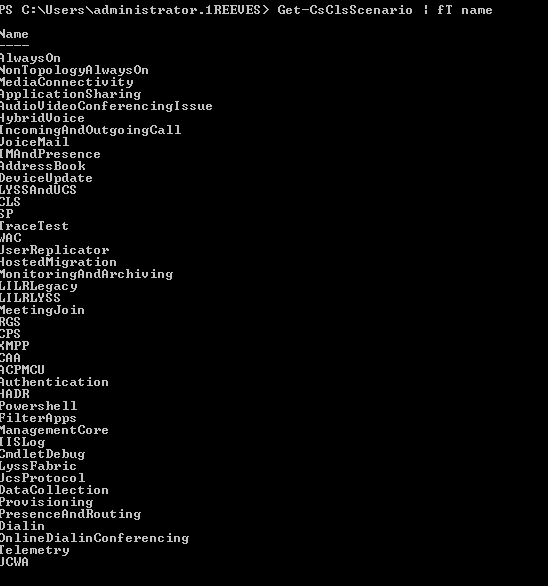

Get logging information for Hyper-V and clustering from this article

The quick history of the CSV cluster is as follows:

2008

- Heartbeats (Intra-Cluster Communications in some documentation) (1GB)

- CSV I/O Redirection (1GB)

- VM Network (1GB)

- Cluster Network (1GB)

- Management Network (1GB)

- iSCSI Network (1GB)

2012 and 2016

- Heartbeats (Network Health Monitoring in some documentation) (QoS important) (10GB)

- Intra-Cluster Communications (QoS important) (10GB)

- CSV I/O Redirection (bandwidth important) (10GB)

- iSCSI Network, not registered in DNS (10GB)

This is where you can clearly see that new clusters in 2016 just don't have the same specifications. The recommendation here is to adjust the cluster networks according to the number of network adapters and their throughput. If the NIC setup looks like the 2008 cluster, apply the 2008 network setup guidelines. If the cluster has two or more 10GB NICs, treat it with the newer 2016 logic. This has worked well for me for some time now, and it ensures that you get the best isolation and throughput for your customer.
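The rule of thumb above can be sketched as a quick check on a node. This is only an illustration of the reasoning, not a Microsoft tool. It assumes the `Speed` property of `Get-NetAdapter` reports bits per second, and the two messages are my own wording.

```powershell
# Physical adapters that are currently up.
$nics = Get-NetAdapter -Physical | Where-Object Status -eq 'Up'

# Speed is reported in bits per second, so 10Gb is 10,000,000,000.
$fast = @($nics | Where-Object Speed -ge 10000000000)

# Show what the node actually has.
$nics | Format-Table Name, InterfaceDescription, LinkSpeed

if ($fast.Count -ge 2) {
    'Two or more 10GB NICs: treat this node with the newer 2012/2016 logic.'
} else {
    'Fewer than two 10GB NICs: apply the 2008-style isolated network guidelines.'
}
```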

So as you can see, the number of NICs is going down, but the NIC speed is going up. To make matters more difficult, Microsoft now states that to be optimized, a CSV cluster will have a combination of isolation and bandwidth. They are no longer able to lean on the hard 5 to 7 NIC requirement that once was the norm. For proof of this, watch the video entitled "Failover Cluster Networking Essentials."

So really, Support may not be giving you a great explanation as to why your CSV cluster is slow. It is closely related to the network design. Does your network look more like a 2008 cluster, or a 2012 or 2016 cluster? The answer will give you justification as to why a cluster would be slow or fast.

Server 2012 Requirements are here, along with a basic script for Embedded Teams
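For orientation, here is a minimal sketch of the two software teaming styles discussed in this article. It is not the script linked above. The adapter names `NIC1` and `NIC2`, the team name `Team1`, and the switch names are hypothetical, and you would normally pick one option or the other, not both.

```powershell
# Option 1: LBFO team (Server 2012 and later) with a virtual switch on top of it.
New-NetLbfoTeam -Name 'Team1' -TeamMembers 'NIC1', 'NIC2' `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm HyperVPort
New-VMSwitch -Name 'TeamSwitch' -NetAdapterName 'Team1' -AllowManagementOS $false

# Option 2: Switch Embedded Teaming (SET, Server 2016 and later).
# The team is created inside the virtual switch itself.
New-VMSwitch -Name 'SetSwitch' -NetAdapterName 'NIC1', 'NIC2' `
    -EnableEmbeddedTeaming $true -AllowManagementOS $false
```

With `-AllowManagementOS $false`, the host gets no virtual NIC on the switch automatically; host networks such as management or Live Migration are then added explicitly with `Add-VMNetworkAdapter -ManagementOS`.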

In addition to the script above, you also have control over the heartbeat, and other things like priority of the various Cluster NICS and timeouts.

Settings you may look at to change if needed

The rest of this article just shows you some configuration settings, in case you find you have to make a manual change. With a 2016 cluster, the guidance is that it's all automatic and should not be changed.

While you can make changes to the following settings, the recommendation is to leave them alone. The automatic settings should adjust properly to situational network changes:

Configure Cluster Heart Beating

(Get-Cluster).SameSubnetDelay = 2000

The above command is an example of how you set the following variables. The delay values are set in milliseconds, so 2000 means 2 seconds. The variables are listed below with their default values:

- SameSubnetDelay (1 Second)

- SameSubnetThreshold (5 heartbeats)

- CrossSubnetDelay (1 Second)

- CrossSubnetThreshold (5 heartbeats)

The above settings are for regular clustering. For Hyper-V clustering, consider the following defaults:

- SameSubnetThreshold (10 heartbeats)

- CrossSubnetThreshold (20 heartbeats)

If you go beyond 10 to 20 on these two settings, the documentation says the overhead starts to outweigh the benefit.
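A minimal sketch for reviewing the current heartbeat values before changing anything, and then applying the Hyper-V thresholds listed above. It assumes you run it on a cluster node with the FailoverClusters module loaded.

```powershell
# Show the current delay and threshold values for the cluster.
Get-Cluster | Format-List *SubnetDelay, *SubnetThreshold

# Apply the Hyper-V thresholds quoted above (number of missed heartbeats).
(Get-Cluster).SameSubnetThreshold = 10
(Get-Cluster).CrossSubnetThreshold = 20

# Confirm the change.
Get-Cluster | Format-List *SubnetThreshold
```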

The step below is only for allowing the creation of the cluster on a slow network. Set the value of SetHeartbeatThresholdOnClusterCreate to 10, for a threshold of 10 seconds.

HKLM\SYSTEM\CurrentControlSet\Services\ClusSvc\Parameters

add DWORD value SetHeartbeatThresholdOnClusterCreate
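The same registry value can be added from PowerShell instead of regedit. This is a sketch that assumes the `ClusSvc\Parameters` key already exists on the node, which I would expect once the Failover Clustering feature is installed.

```powershell
# Registry key named in the step above.
$path = 'HKLM:\SYSTEM\CurrentControlSet\Services\ClusSvc\Parameters'

# Create (or overwrite) the DWORD value with a value of 10.
New-ItemProperty -Path $path -Name 'SetHeartbeatThresholdOnClusterCreate' `
    -PropertyType DWord -Value 10 -Force

# Read it back to confirm.
Get-ItemProperty -Path $path -Name 'SetHeartbeatThresholdOnClusterCreate'
```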

Configure Full Mesh HeartBeat

(Get-Cluster).PlumbAllCrossSubnetRoutes = 1

Other important changes to Cluster Setup Parameters

Please be advised that all of the following syntax has been duplicated from this publicly available Microsoft article:

Change Cluster Network Roles (0 = no cluster communication, 1 = cluster communication only, 3 = client and cluster communication)

- (Get-ClusterNetwork "Cluster Network 1").Role = 3

- Get-ClusterNetwork | ft Name, Metric, AutoMetric, Role

- (Get-ClusterNetwork "Cluster Network 1").Metric = 900

- (Get-ClusterNetwork "Cluster Network 1").AutoMetric = $true
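To tie the role values back to the three-network rule from the checklist, here is a small sketch that sets all roles in one pass. The mapping of network names to roles is hypothetical; substitute the names that `Get-ClusterNetwork` shows on your cluster.

```powershell
# Hypothetical mapping of cluster network names to roles:
# 1 = cluster communication only, 3 = client and cluster, 0 = none (iSCSI).
$roles = @{
    'Cluster Network 1' = 1
    'Cluster Network 2' = 3
    'Cluster Network 3' = 0
}

# Apply each role to its network.
foreach ($name in $roles.Keys) {
    (Get-ClusterNetwork -Name $name).Role = $roles[$name]
}

# Review the result.
Get-ClusterNetwork | Format-Table Name, Address, Role, Metric, AutoMetric
```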

Set Quality of Service policies (values 0-6) (must be enabled on all the nodes in the cluster and on the physical network switch)

- New-NetQosPolicy "Cluster" -Cluster -Priority 6

- New-NetQosPolicy "SMB" -SMB -Priority 5

- New-NetQosPolicy "Live Migration" -LiveMigration -Priority 3

Set bandwidth policy (relative minimum bandwidth policy) (it is recommended to configure a relative minimum bandwidth SMB policy on CSV deployments)

- New-NetQosPolicy "Cluster" -Cluster -MinBandwidthWeightAction 30

- New-NetQosPolicy "Live Migration" -LiveMigration -MinBandwidthWeightAction 20

- New-NetQosPolicy "SMB" -SMB -MinBandwidthWeightAction 50
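One practical note: the priority and bandwidth examples above use the same policy names, so if the priority policies already exist, creating them a second time with `New-NetQosPolicy` may fail on the duplicate name. A sketch of the alternative, assuming policies named "Cluster", "Live Migration" and "SMB" were already created, is to update them in place:

```powershell
# Add the relative minimum bandwidth weights to the existing policies.
Set-NetQosPolicy -Name 'Cluster' -MinBandwidthWeightAction 30
Set-NetQosPolicy -Name 'Live Migration' -MinBandwidthWeightAction 20
Set-NetQosPolicy -Name 'SMB' -MinBandwidthWeightAction 50

# Review all QoS policies on this node; repeat on every node in the cluster.
Get-NetQosPolicy
```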

If you need to add a Hyper-V replica

- Add-VMNetworkAdapter -ManagementOS -Name "Replica" -SwitchName "TeamSwitch"

Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "Replica" -Access -VlanId 17

Set-VMNetworkAdapter -ManagementOS -Name "Replica" -VmqWeight 80 -MinimumBandwidthWeight 10

# If the host is clustered, configure the cluster name and role

(Get-ClusterNetwork | Where-Object {$_.Address -eq "10.0.17.0"}).Name = "Replica"

(Get-ClusterNetwork -Name "Replica").Role = 3

From <https://technet.microsoft.com/en-us/library/dn550728(v=ws.11).aspx>

Configure Live Migration Network

- # Configure the live migration network

Get-ClusterResourceType -Name "Virtual Machine" | Set-ClusterParameter -Name MigrationExcludeNetworks -Value ([String]::Join(";",(Get-ClusterNetwork | Where-Object {$_.Name -ne "Migration_Network"}).ID))

From <https://technet.microsoft.com/en-us/library/dn550728(v=ws.11).aspx>
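After running the Live Migration command above, it is worth reading the parameter back. This sketch only reads values; the excluded network IDs it prints can be matched against the ID column of the cluster networks.

```powershell
# Show which cluster networks are currently excluded from Live Migration.
Get-ClusterResourceType -Name 'Virtual Machine' |
    Get-ClusterParameter -Name MigrationExcludeNetworks

# List network names with their IDs to interpret the value above.
Get-ClusterNetwork | Format-Table Name, ID
```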

Other Commands

- Enable VM teaming: Set-VMNetworkAdapter -VMName <VMname> -AllowTeaming On

- Restrict SMB: New-SmbMultichannelConstraint -ServerName "FileServer1" -InterfaceAlias "SMB1", "SMB2", "SMB3", "SMB4"
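To check what an SMB constraint actually did, the following sketch lists the constraints and the connections SMB Multichannel is currently using. The last line, which removes every constraint, is left commented out on purpose.

```powershell
# List the SMB Multichannel constraints defined on this machine.
Get-SmbMultichannelConstraint

# Show the interfaces SMB Multichannel is using for current connections.
Get-SmbMultichannelConnection

# To undo the restriction, remove the constraints (uncomment to run).
# Get-SmbMultichannelConstraint | Remove-SmbMultichannelConstraint
```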